AI: The Great Un(Re)doing

Who am I and what right or qualifications do I have to tell you anything about AI and the impact it has and will have on human beings?

I’ve sat on this question for a couple of weeks. And yet, there’s a small poking sensation in the back of my head saying, “This is it; you know this is what you want to write about”. So here we are - me typing this and you reading it.

With that - hello, my name is Arynn, and I am a data advocate who has worked in the data space for over a decade. Am I an expert on AI and Large Language Models (LLMs)? No, certainly not. But perhaps, like the vast majority of people working in data, I am a consumer and user of tools that utilize these types of functions.

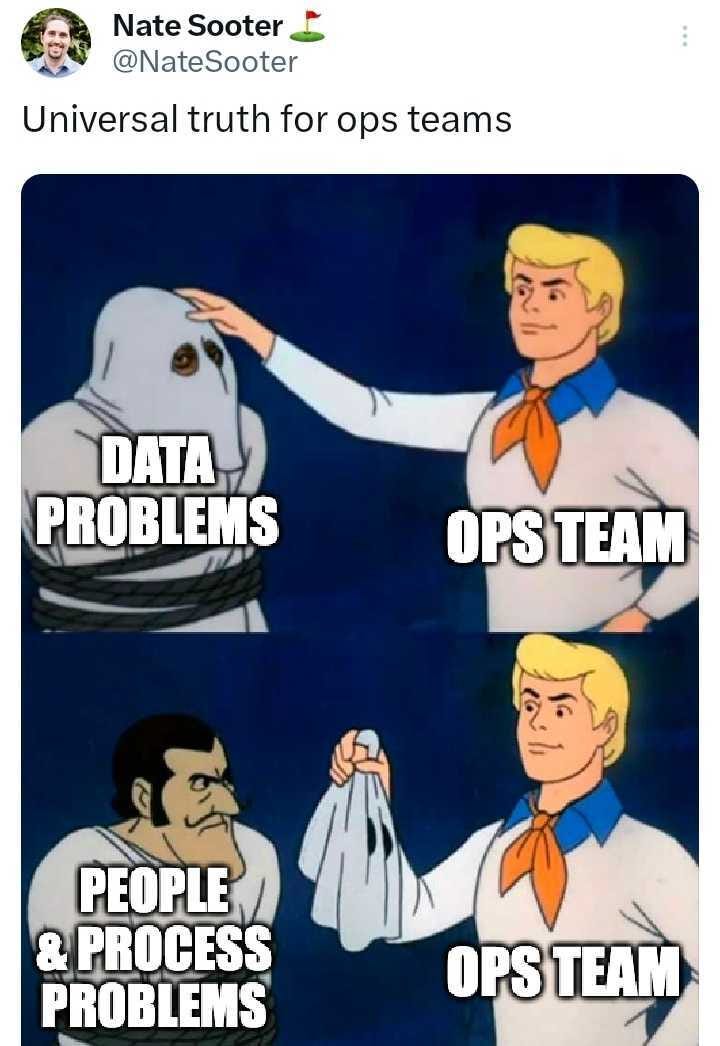

It is probably worth noting that my views and opinions are flavored by my experience prior to working in data. Educationally, I studied industrial-organizational psychology. What that essentially means is that I tend to see the people problems that are often lurking behind the data problems. This is not revolutionary, and there have been some great memes from other people stumbling onto the exact same conclusion.

The People Problem

How are AI (and LLMs and GPT and all those other offshoots) a people problem?

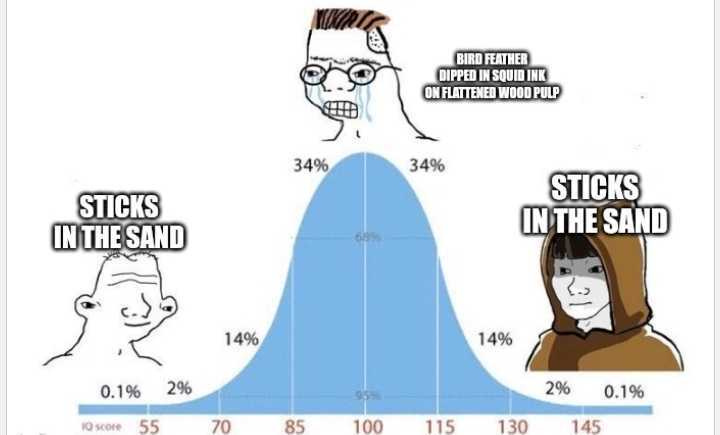

Every new technological advancement since the dawn of humanity has been a people problem. This is nothing new. In fact, humans have been evolving and adapting throughout all of recorded history. If you compare how you live your average day to the average day of someone 1,000 years ago, the tools you use and the way you execute various processes are drastically different. But humans are tricky; despite all of these evolutions, we are naturally and incredibly resistant to change. Only some changes stick. Others, we make fun of as mid-IQ fodder.

Human behavior and, more broadly, social patterns are incredibly hard to measure and predict. When does the seemingly harder and more complicated way become the easy and popular way? What increases the chances of “the next new thing” having any staying power? Even the experts don’t have a consensus for answering those questions. However, we keep on changing, adapting and evolving nonetheless. There’s a lot of stacked complexity that went into the ability to send someone a Slack message, but I think it’s safe to say that people are opting to do that versus sending their colleague a message over snail mail.

AI and the Data Space

So let’s take a step away from the meta view, and dive into the more granular changes we see happening in the data space - namely, with the rapid advancements and adoption of AI.

In recent years, there has been a growing concern that AI will render various roles in the data and technology space obsolete, leaving countless professionals jobless. Yet history has shown us that as humans, we have always adapted and reshaped our work in response to new technologies. Similarly, AI presents us with an opportunity to redefine and enhance the way we operate. AI is already reshaping industries and altering the nature of work.

So is AI the great undoing of jobs as we know them? Are all of the data folks going to lose their jobs or become redundant? Are machines going to take over the work and destroy humanity as we know it?

Yes. Yes to all of it.

But we have done this before! Millions of times over thousands of years! Perhaps it is my own personal twinge of optimism, but if we use how we adopted massive change in the past to predict how we will adopt massive change now, my bet is that we will persevere. What will that look like, exactly? I don’t know. But also, nobody knows. We almost never do. As I alluded to before, we are incredibly bad at predicting societal adoption and change. But you know what we are good at?

Re-doing - re-doing things, but in a different and better way. So will AI be the great undoing? Perhaps, if you want to semantically call it that. But I believe in my core that we will be ripping, replacing, and re-doing as we have always done and always will do. AI is not the great undoing; rather, it is the great re-doing of roles and functions.

Bonus content: Some ways I have used ChatGPT.

Giving GPT the prompt of an entire article that I (or someone else) wrote, and saying “make a tweet about this”. It even gives you emojis. It’s great.

The reverse - giving GPT a short prompt and asking it to write an entire article about it. To humor myself, I actually did that for this very article! Was anything you just read written by GPT? Actually, no. However, when you’re stuck in writer’s block (or just want some inspiration), the output can give you just the right amount of “I wouldn’t write it like that” spite to fuel you. It follows the law of the internet - write something so bad and wrong that people will be forced to interact to disagree with you.